Brain Computer Interface

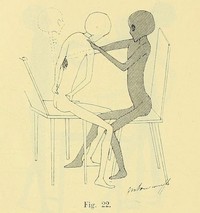

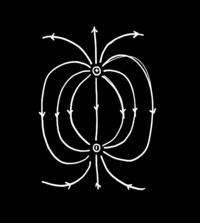

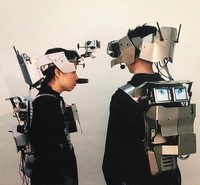

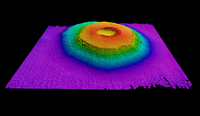

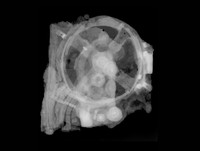

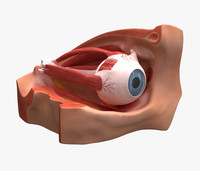

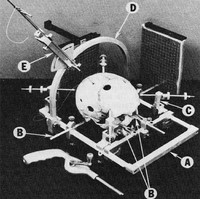

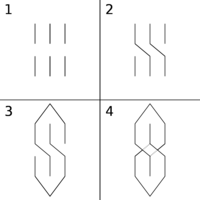

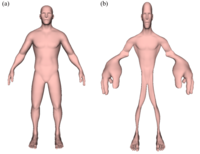

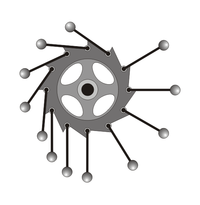

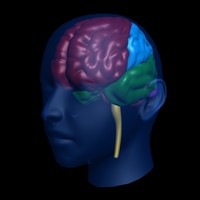

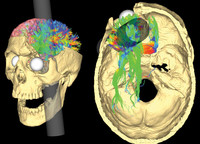

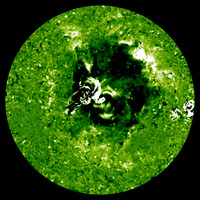

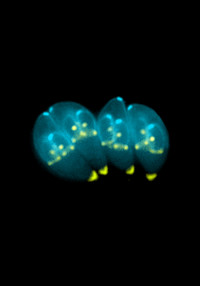

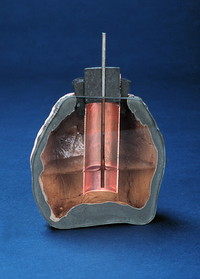

A brain–computer interface (BCI) is a direct communication pathway between the brain’s electrical activity and an external device, most commonly a computer or robotic limb. BCIs are often directed at researching, mapping, assisting, augmenting, or repairing human cognitive or sensory-motor functions. Implementations of BCIs are classed by how close the electrodes get to brain tissue: non-invasive (EEG, MEG, EOG, MRI), partially invasive (ECoG and endovascular), and invasive (microelectrode array). Because of the brain’s cortical plasticity, signals from implanted prostheses can, after adaptation, be handled by the brain like natural sensor or effector channels. Following years of animal experimentation, the first neuroprosthetic devices implanted in humans appeared in the mid-1990s.

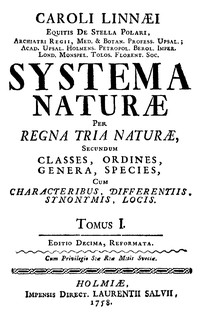

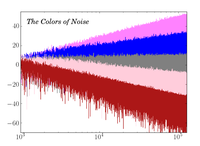

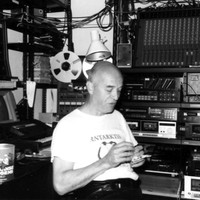

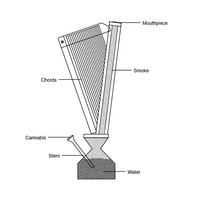

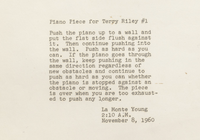

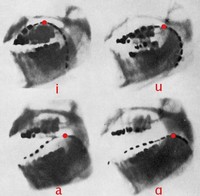

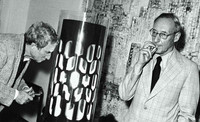

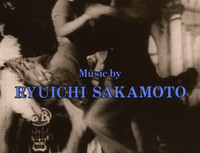

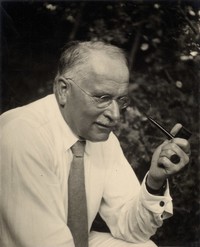

The history of brain–computer interfaces (BCIs) starts with German psychiatrist Hans Berger’s discovery of the electrical activity of the human brain and the development of electroencephalography (EEG) in the early 1920s. Berger recorded human brain activity by means of EEG to analyse how alterations in his EEG wave diagrams related to brain diseases. One of the earliest examples of a working brain-machine interface was the piece Music for Solo Performer (1965) by the American composer Alvin Lucier. The piece uses EEG and analog signal processing hardware (filters, amplifiers, and a mixing board) to stimulate acoustic percussion instruments. To perform the piece, one must produce alpha waves and thereby “play” the various percussion instruments via loudspeakers placed near or directly on the instruments themselves. The term “brain–computer interface” was coined in the scientific literature by computer scientist Jacques Vidal in 1973, when research on BCIs began at the University of California, Los Angeles (UCLA).

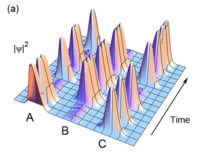

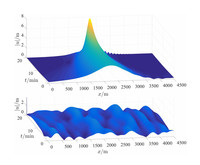

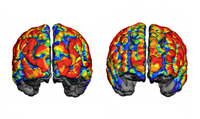

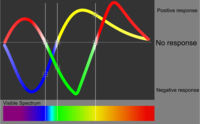

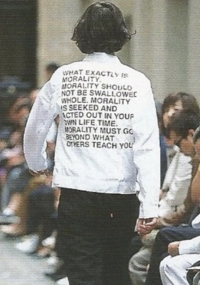

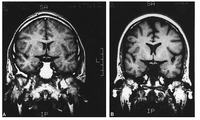

Recently, studies in human-computer interaction that apply machine learning to statistical temporal features extracted from frontal-lobe EEG brainwave data have had high levels of success in classifying mental states (Relaxed, Neutral, Concentrating), emotional states (Negative, Neutral, Positive), and thalamocortical dysrhythmia, a theoretical framework in which neuroscientists try to explain the positive and negative symptoms induced by neuropsychiatric disorders such as Parkinson’s disease, neurogenic pain, visual snow syndrome, schizophrenia, obsessive–compulsive disorder, depressive disorder, and epilepsy.
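As a rough illustration of what classifying a signal from statistical temporal features can look like, the sketch below splits a signal into windows, computes a few summary statistics per window, and assigns a label with a nearest-centroid rule. Everything in it is an assumption made for illustration: the signals are synthetic sine waves with noise rather than real EEG, the four features (mean, standard deviation, minimum, maximum) and the nearest-centroid classifier stand in for whatever the studies actually used, and the function names are hypothetical.

```python
import math
import random
import statistics


def window_features(signal, window_size):
    """Split a 1-D signal into non-overlapping windows and return
    [mean, standard deviation, min, max] for each full window."""
    features = []
    for start in range(0, len(signal) - window_size + 1, window_size):
        window = signal[start:start + window_size]
        features.append([
            statistics.fmean(window),
            statistics.pstdev(window),
            min(window),
            max(window),
        ])
    return features


def synthetic_signal(freq_hz, amplitude, n_samples, rate_hz, rng):
    """Hypothetical stand-in for an EEG channel: a sine wave plus Gaussian noise."""
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * t / rate_hz) + rng.gauss(0, 0.1)
        for t in range(n_samples)
    ]


def fit_centroids(features_by_label):
    """Average the feature vectors of each label into one centroid per label."""
    return {
        label: [statistics.fmean(column) for column in zip(*rows)]
        for label, rows in features_by_label.items()
    }


def predict(centroids, row):
    """Return the label whose centroid is closest (Euclidean distance) to row."""
    return min(centroids, key=lambda label: math.dist(centroids[label], row))


# Assumed toy setup: "relaxed" is a strong 10 Hz rhythm, "concentrating" a weak 20 Hz one.
RATE_HZ = 128
WINDOW = 128  # one-second windows
SETTINGS = {"relaxed": (10, 2.0), "concentrating": (20, 0.5)}

rng = random.Random(0)
train = {
    label: window_features(synthetic_signal(f, a, 8 * WINDOW, RATE_HZ, rng), WINDOW)
    for label, (f, a) in SETTINGS.items()
}
centroids = fit_centroids(train)

# Evaluate on freshly generated windows that were not used to fit the centroids.
test_rows = [
    (label, row)
    for label, (f, a) in SETTINGS.items()
    for row in window_features(synthetic_signal(f, a, 4 * WINDOW, RATE_HZ, rng), WINDOW)
]
accuracy = sum(predict(centroids, row) == label for label, row in test_rows) / len(test_rows)
print(f"accuracy on synthetic windows: {accuracy:.2f}")
```

Real EEG is far noisier and the classes overlap much more than in this toy setup, so a result here says nothing about accuracy on recorded data; the point is only the shape of the pipeline (window, summarize, classify).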

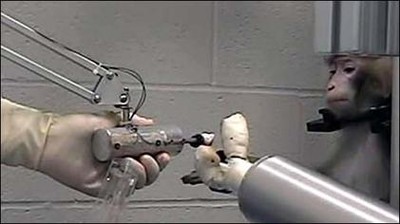

Several laboratories have recorded signals from monkey and rat cerebral cortices to operate BCIs that produce movement. Monkeys have navigated computer cursors on screen and commanded robotic arms to perform simple tasks simply by thinking about the task and seeing the visual feedback, without any motor output. In May 2008, photographs showing a monkey at the University of Pittsburgh Medical Center operating a robotic arm by thinking were published in a number of science journals and magazines. In 2020, Neuralink, the company of American entrepreneur Elon Musk, announced in a widely viewed webcast that its device had been successfully implanted in a pig. In 2021, Musk announced that the company had enabled a monkey to play video games using Neuralink’s device.